Convex optimization

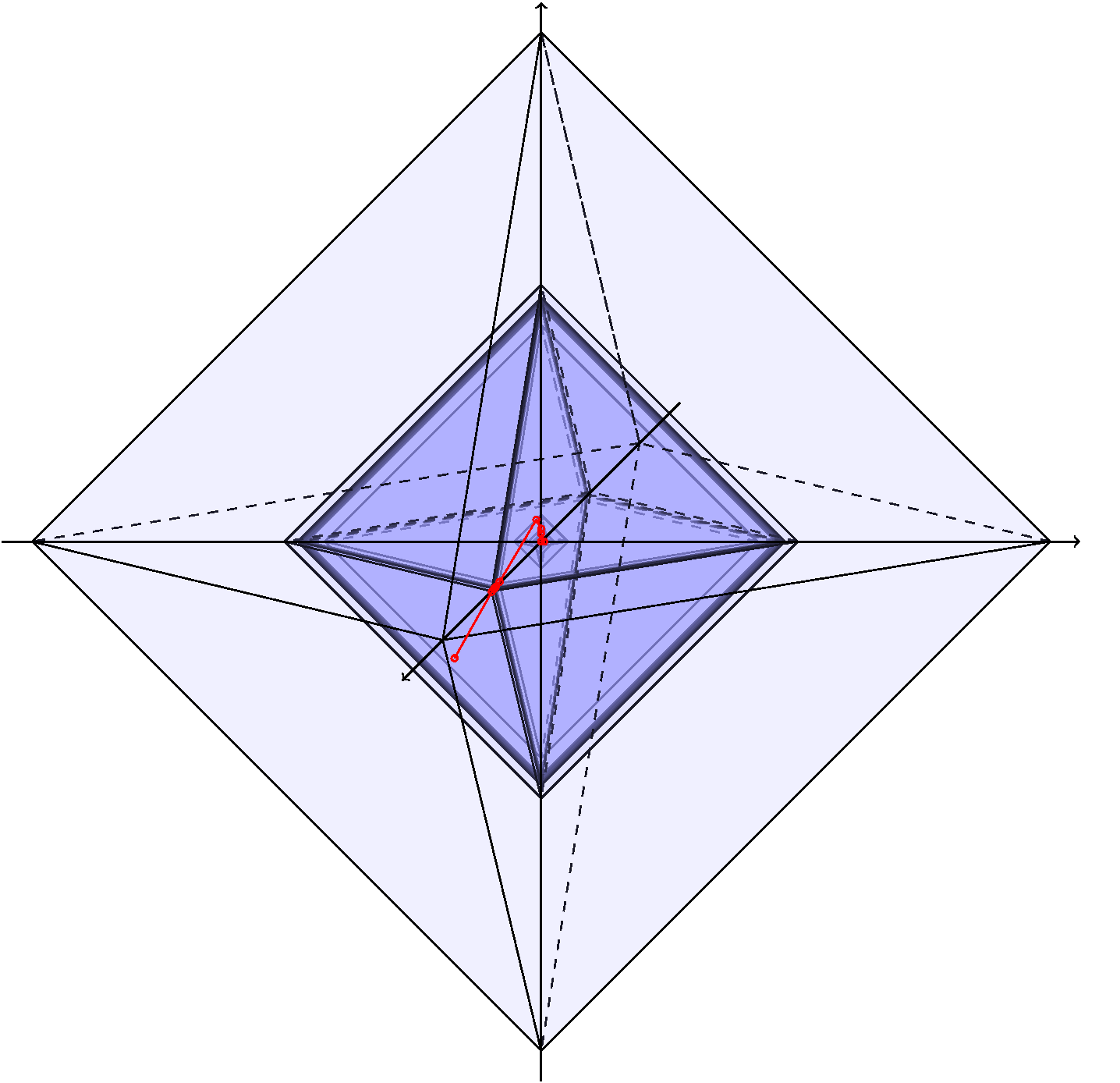

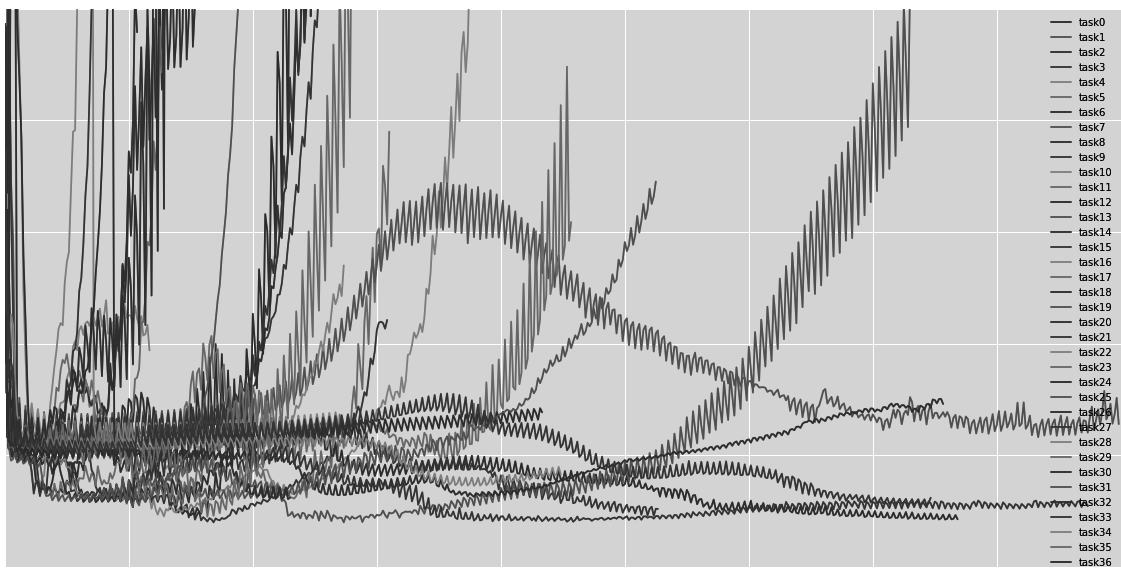

Accelerated first-order methods for regularization — FISTA

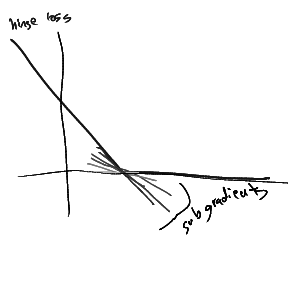

Subgradient — The subgradient generalizes the notion of the gradient (derivative) and quantifies the rate of change of a non-differentiable (non-smooth) function ([1], Section 8.1 in [2]). For a real-valued function, the
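
For orientation, the standard textbook definition of a subgradient can be sketched as below. The symbols $f$, $g$, $x$, $y$ and the absolute-value example are not taken from the excerpt; they are the usual convention and are assumed here for illustration only.

```latex
% g is a subgradient of a convex function f at x if the affine function
% through (x, f(x)) with slope g lies below f everywhere:
g \in \partial f(x)
\quad\Longleftrightarrow\quad
f(y) \;\ge\; f(x) + g^{\top}(y - x) \quad \text{for all } y .

% Example: f(x) = |x| is not differentiable at 0, yet
\partial f(x) =
\begin{cases}
\{-1\}   & x < 0, \\
[-1, 1]  & x = 0, \\
\{+1\}   & x > 0 .
\end{cases}
```

Where $f$ is differentiable, the subdifferential $\partial f(x)$ contains only the gradient, which is the sense in which the subgradient generalizes it.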

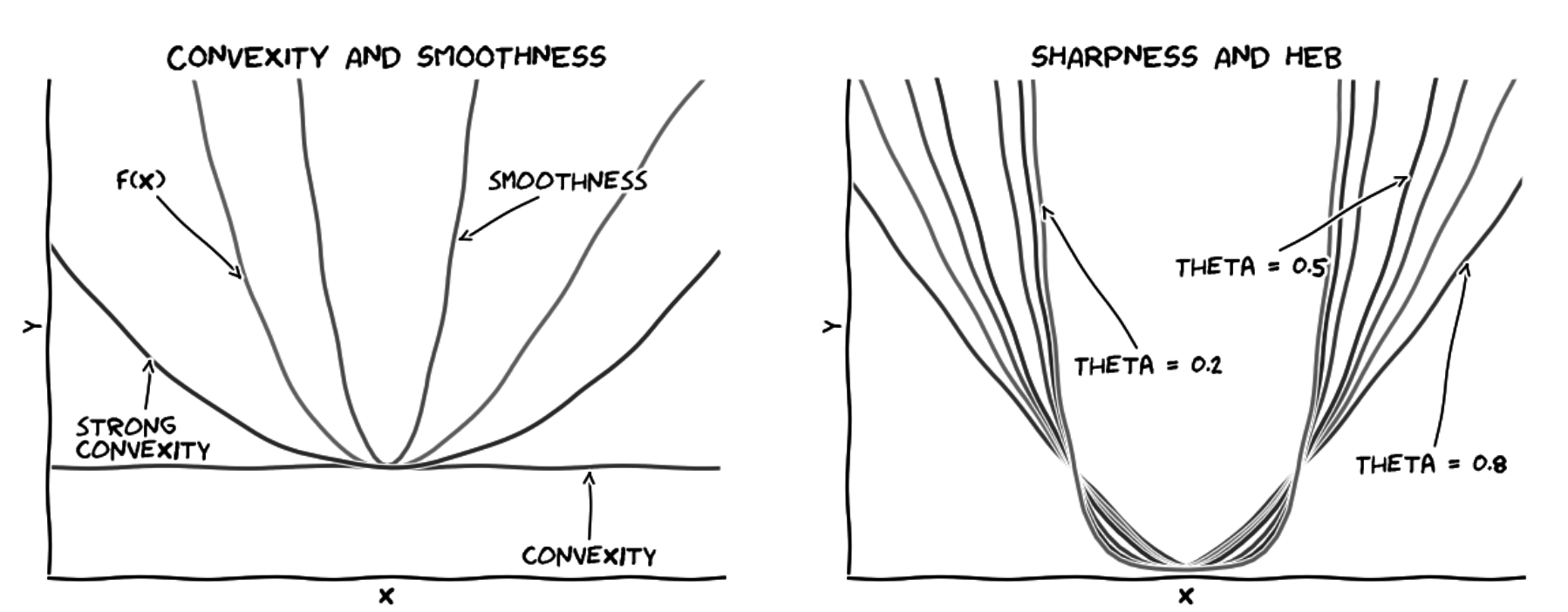

α-strong convexity, β-strong smoothness — Strong convexity often allows for optimization algorithms that converge very quickly to an ϵ-optimum (cf. FISTA and NESTA). This post will cover some fundamentals of
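
As a rough reminder of what the two properties in the title mean, the usual definitions for a differentiable function $f$ with respect to the Euclidean norm are sketched below. The notation ($x$, $y$, $\kappa$) is assumed here and may differ from the notation used in the post itself.

```latex
% alpha-strong convexity: f is bounded below by a quadratic at every point.
f(y) \;\ge\; f(x) + \nabla f(x)^{\top}(y - x) + \frac{\alpha}{2}\,\lVert y - x \rVert_2^2
\quad \text{for all } x, y .

% beta-smoothness: f is bounded above by a quadratic at every point.
f(y) \;\le\; f(x) + \nabla f(x)^{\top}(y - x) + \frac{\beta}{2}\,\lVert y - x \rVert_2^2
\quad \text{for all } x, y .

% When both hold, the ratio below (the condition number) governs how fast
% first-order methods converge.
\kappa = \frac{\beta}{\alpha} \;\ge\; 1 .
```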

Obituaries: Harold Kuhn (1925–2014)

Accelerating first-order methods — The lower bound on the oracle complexity of minimizing a continuously differentiable, β-smooth convex function is Ω(1/√ϵ) [Theorem 2.1.6, Nesterov04; Theorem 3.8, Bubeck14; Nesterov08]. General first-order gradient
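
To make the gap between plain and accelerated first-order methods concrete, here is a minimal sketch in pure Python. It compares gradient descent with one common form of Nesterov's accelerated gradient method, using momentum weight (k − 1)/(k + 2), on a small diagonal quadratic. The test problem, the function names (`f`, `grad`, `gradient_descent`, `nesterov`) and the constants are all assumptions chosen for illustration and are not taken from the post.

```python
# Toy problem (assumed for illustration): f(x) = 0.5 * sum(lam_i * x_i^2),
# a convex quadratic with minimizer x* = 0, f* = 0 and smoothness beta = max(lam).

def f(x, lams):
    return 0.5 * sum(l * xi * xi for l, xi in zip(lams, x))

def grad(x, lams):
    return [l * xi for l, xi in zip(lams, x)]

def gradient_descent(x0, lams, beta, iters):
    """Plain gradient descent with the constant step size 1/beta."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x, lams)
        x = [xi - gi / beta for xi, gi in zip(x, g)]
    return x

def nesterov(x0, lams, beta, iters):
    """Accelerated gradient: a gradient step taken at an extrapolated point y."""
    x = list(x0)
    y = list(x0)
    for k in range(1, iters + 1):
        g = grad(y, lams)
        x_next = [yi - gi / beta for yi, gi in zip(y, g)]
        momentum = (k - 1) / (k + 2)  # zero on the first step
        y = [xn + momentum * (xn - xi) for xn, xi in zip(x_next, x)]
        x = x_next
    return x

lams = [0.001, 0.01, 0.1, 1.0]  # ill-conditioned: eigenvalues span three decades
beta = max(lams)
x0 = [1.0, 1.0, 1.0, 1.0]
iters = 200

f_gd = f(gradient_descent(x0, lams, beta, iters), lams)
f_agd = f(nesterov(x0, lams, beta, iters), lams)
print("gradient descent:", f_gd)
print("accelerated     :", f_agd)
```

With the same step size and the same number of gradient evaluations, the accelerated variant should end at a noticeably smaller objective value on this problem, in line with its O(1/k²) guarantee versus O(1/k) for plain gradient descent.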